SAM2Act:

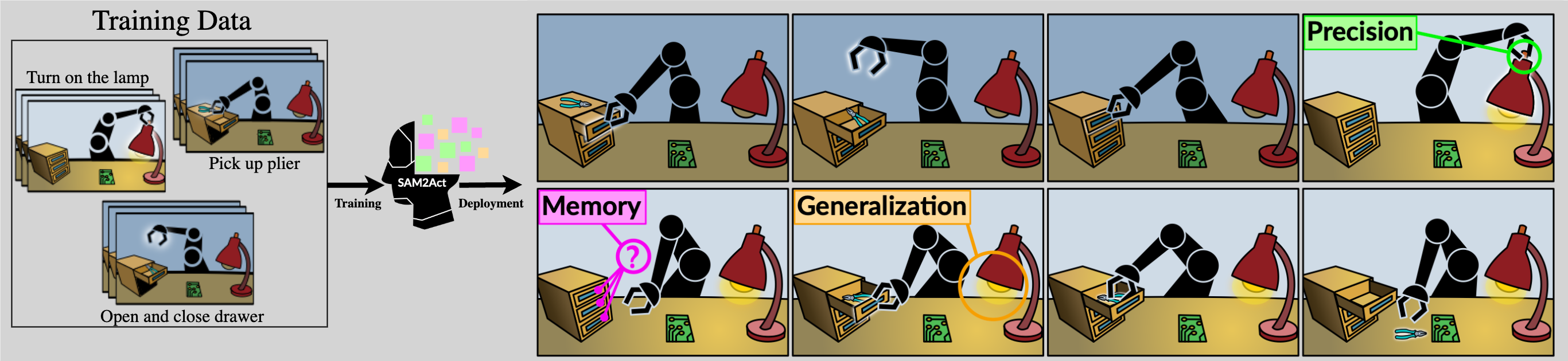

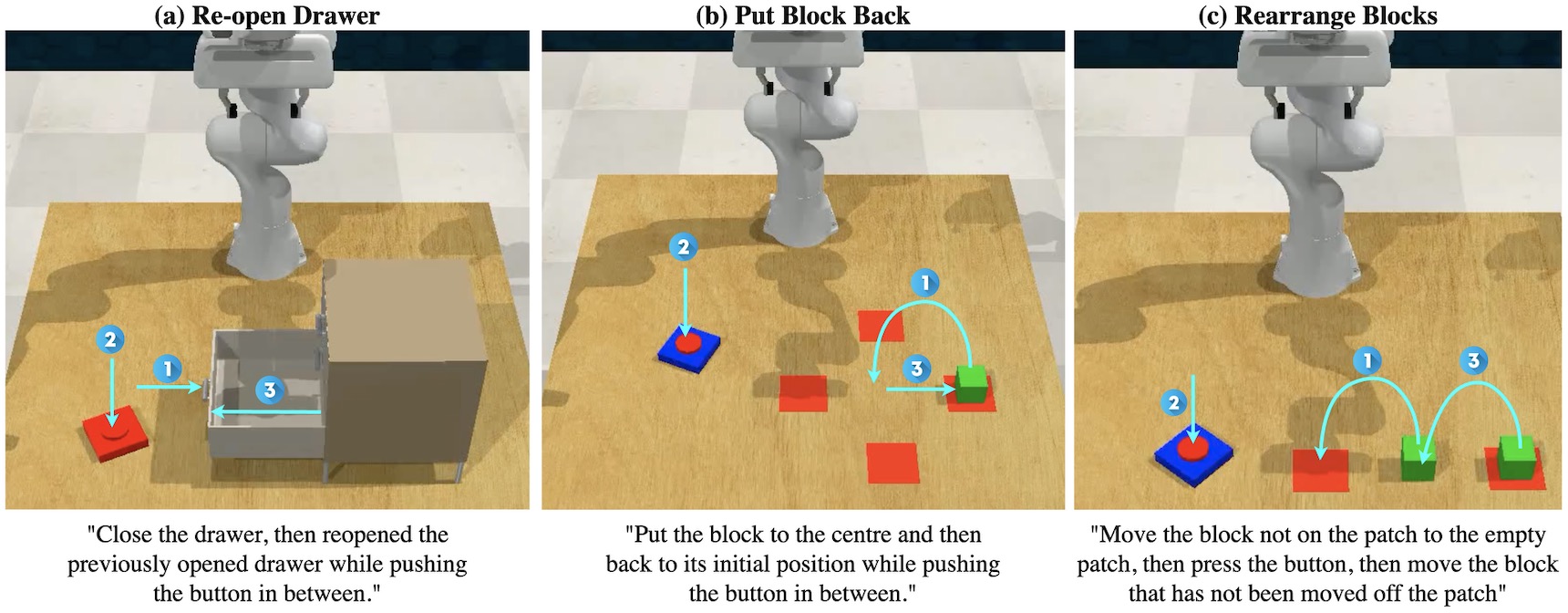

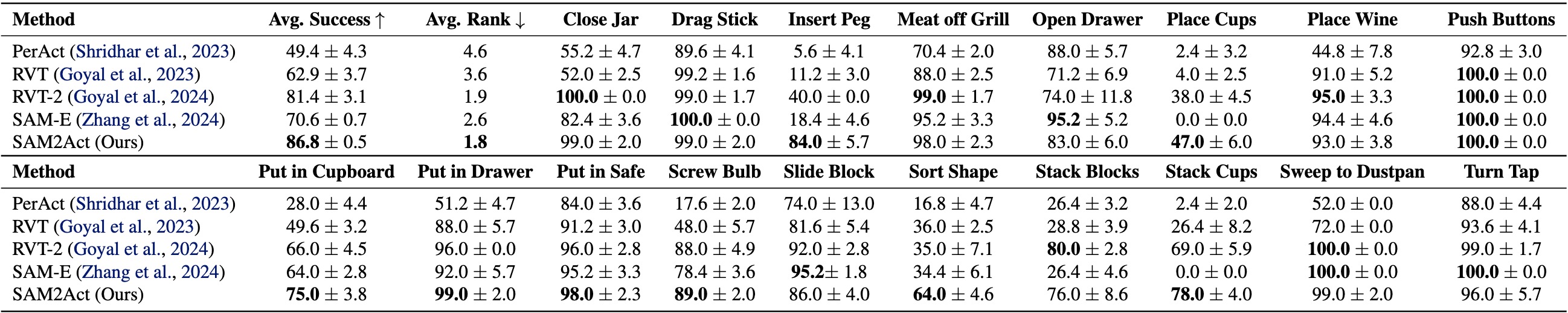

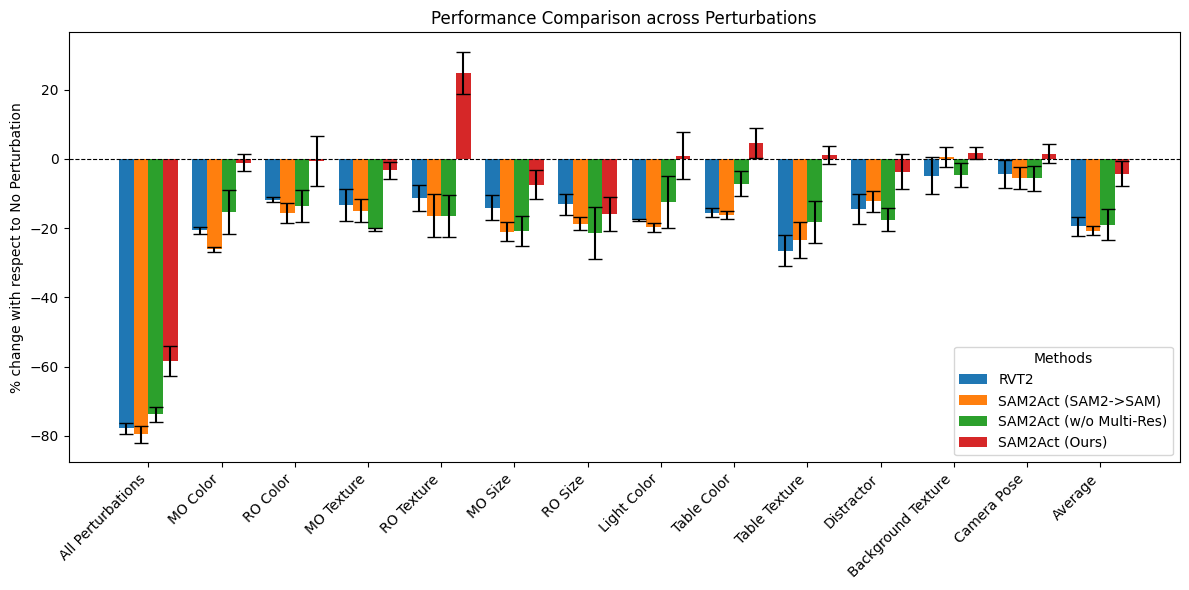

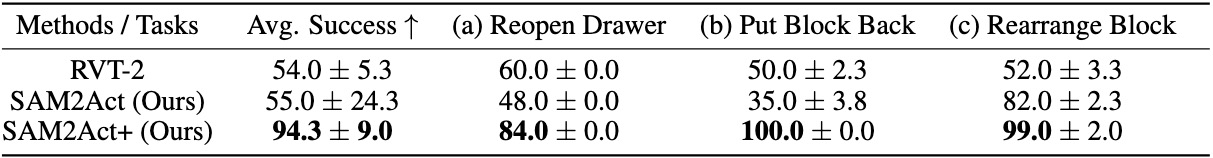

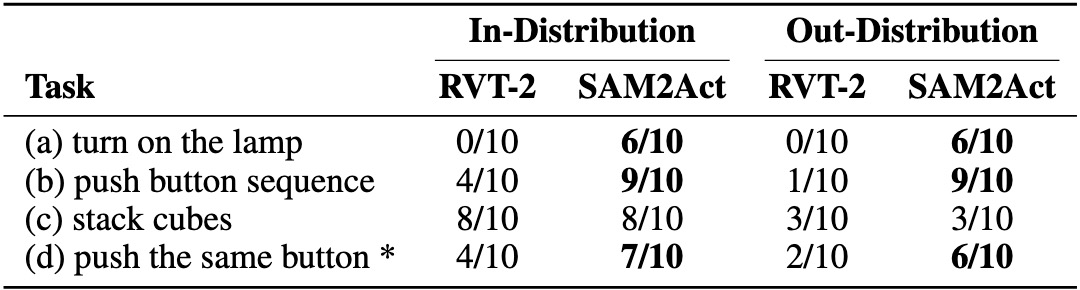

Robotic manipulation systems operating in diverse, dynamic environments must exhibit three critical abilities: multitask interaction, generalization to unseen scenarios, and spatial memory. While significant progress has been made in robotic manipulation, existing approaches often fall short in generalizing to complex environmental variations and in addressing memory-dependent tasks. To bridge this gap, we introduce SAM2Act, a multi-view robotic transformer-based policy that leverages multi-resolution upsampling with visual representations from a large-scale foundation model. SAM2Act achieves a state-of-the-art average success rate of 86.8% across 18 tasks in the RLBench benchmark, and demonstrates robust generalization on The Colosseum benchmark, with only a 4.3% performance gap under diverse environmental perturbations. Building on this foundation, we propose SAM2Act+, a memory-based architecture inspired by SAM2, which incorporates a memory bank, an encoder, and an attention mechanism to enhance spatial memory. To address the need for evaluating memory-dependent tasks, we introduce MemoryBench, a novel benchmark designed to assess spatial memory and action recall in robotic manipulation. SAM2Act+ achieves competitive performance on MemoryBench, significantly outperforming existing approaches and pushing the boundaries of memory-based robotic systems.

$$ P\bigl(o_{t+1} \mid o_1, a_1, \dots, o_t, a_t\bigr) \;=\; P\bigl(o_{t+1} \mid o_t, a_t\bigr). $$

This Markov assumption implies that knowing only \( o_t \) and \( a_t \) is sufficient to predict \( o_{t+1} \). However, in our tasks, we design scenarios where two distinct action histories lead to the same observation \( o_t \) but require different subsequent actions. This forces the agent to recall which action history led to \( o_t \) in order to perform the correct next action. Furthermore, we standardized the language instructions to prevent unintentional leakage of spatial information that could aid the model in memory-based tasks. These principles guided the development of our spatial memory-based tasks.
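The design principle above can be illustrated with a toy example. The sketch below is not a MemoryBench task: the button-pressing environment, the function names, and the rule "press the same button again" are all hypothetical, chosen only to show why a policy that sees the current observation alone cannot succeed on both histories, while a policy with access to the action history can.

```python
from typing import Callable, Sequence, Tuple

Action = str
History = Tuple[Action, ...]
Policy = Callable[[str, History], Action]


def observe(history: History) -> str:
    """Hypothetical toy scene: after any button press the scene resets,
    so every non-empty history produces the same observation."""
    return "initial_scene" if not history else "scene_reset"


def required_action(history: History) -> Action:
    """Hypothetical task rule: press the same button that was pressed first."""
    return history[0]


def memoryless_policy(observation: str, history: History) -> Action:
    """Uses only the current observation, so it must commit to a single
    action for 'scene_reset' regardless of what happened before."""
    return "press_left"


def memory_policy(observation: str, history: History) -> Action:
    """Recalls the first action from the stored history."""
    return history[0]


def success_rate(policy: Policy, histories: Sequence[History]) -> float:
    """Fraction of histories on which the policy picks the required action."""
    hits = sum(policy(observe(h), h) == required_action(h) for h in histories)
    return hits / len(histories)


# Two distinct action histories that lead to the same observation.
HISTORIES = [("press_left",), ("press_right",)]

print(observe(HISTORIES[0]) == observe(HISTORIES[1]))  # True
print(success_rate(memoryless_policy, HISTORIES))      # 0.5
print(success_rate(memory_policy, HISTORIES))          # 1.0
```

Any deterministic function of the observation alone is capped at 50% success in this toy setup, since both histories map to the same observation but require different actions. This is the property the tasks are designed to have.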

@misc{fang2025sam2act,
  title={SAM2Act: Integrating Visual Foundation Model with A Memory Architecture for Robotic Manipulation},
  author={Haoquan Fang and Markus Grotz and Wilbert Pumacay and Yi Ru Wang and Dieter Fox and Ranjay Krishna and Jiafei Duan},
  year={2025},
  eprint={2501.18564},
  archivePrefix={arXiv},
  primaryClass={cs.RO},
  url={https://arxiv.org/abs/2501.18564},
}